It doesn’t take a brilliant futurist to predict that water flows downhill: If a technology allows people to get results faster, all else being equal, it’s going to win out.

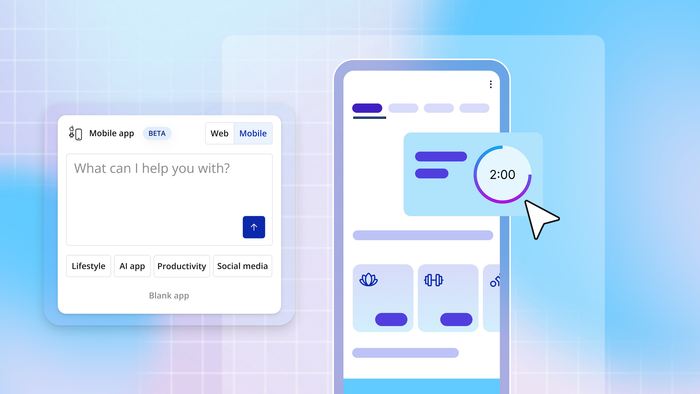

That’s exactly what’s happening as generative AI and no-code combine — from AI chatbots that give you intelligent building instructions to tools that generate full-fledged applications for you to iterate on. As we speak, the Bubble team is actively working on bringing new AI features into our own platform’s experience.

As I’ve written about before, I expect this shift to usher in a whole new phase of tech innovation. If we play our cards right, it can also become an engine for massive empowerment and decentralization (which, of course, is why we founded Bubble in the first place).

But just because the rise of AI-driven no-code is inevitable doesn’t mean it will automatically lead to positive change. That will only happen if the no-code industry pursues it on purpose: by putting that goal first, understanding the risks inherent to AI-driven tools, and building those tools intentionally.

Tipping the scales toward empowerment

The rise of no-code is a major corrective to a bad pathway in technological development. A tiny number of people have built the products that shape the lives of the rest of humanity: Facebook and Twitter (or, uh, X, I guess), Google, Amazon. Power has become concentrated in a small handful of elite specialists with a deep technical vocabulary.

The counter-push has always been new innovation: Tens of thousands of people have drawn on their own lived experiences to build applications for niche audiences full of people like them. These are solutions that the folks at Google HQ, excellent humans as they are, would never have thought of in a million years.

But it’s an uneasy balance, and big tech still stands tall. No-code is a powerful force that can help even out the scales: When anyone, from non-technical tinkerers to supercharged code developers, can take a good idea to market, the advantage of massive investment and scale shrinks.

AI-driven no-code will take this even further — if we build the tools conscientiously.

Mitigating the risks of AI in no-code tools

Today’s AI technology can produce extremely impressive results, but it doesn’t have independent judgment, agency, or a deep understanding of what it’s creating the way a human builder does. Software development requires deep empathy for the product’s users, knowledge from outside the immediate context about why the software exists and how it will be used, and creative ingenuity that goes beyond replicating patterns people have solved before. This is true for no-code as well as code, and it’s something AI won’t replace, short of AI achieving sentience and becoming a peer species to humans. We’re nowhere near that (and it would raise much bigger issues than the future of software development!).

Still, there are certain concerns inherent to AI that could cross over into AI-driven no-code tools, if we aren’t careful. I believe the big ones are:

- Intellectual property and copying: Will AI-driven tools replace human creators by letting people make cheap knock-offs of their work?

- Biased training data: Will limited, biased training data make it easy to build some applications but not others?

Intellectual property

One of the biggest ethical concerns facing anyone building AI-driven no-code platforms is accidentally giving users a tool that rips off professional app developers: Models train on the developers’ work, and then people use the tool to quickly churn out knock-offs of that work, undercutting the app creators’ business. This has been a problem for digital artists since the advent of publicly accessible AI tools (see: DeviantArt).

But it doesn’t have to be that way. Society’s norms and laws are still evolving to address IP concerns, but at the heart of the matter, I think the true ethical distinction is between learning from someone’s work and copying it. This problem isn’t unique to AI; humans can rip each other off, too. But we can also be inspired by and learn from each other in an additive way. It may sound strange to talk about an AI program being “inspired,” but behind the scenes, that’s the best way to characterize how AI models learn: They generalize, seeing patterns and finding commonalities. That said, AI models can “overtrain” (overfit), where instead of just learning the structure of their subject matter, they end up reproducing very specific details of an individual creator’s work in their output.

From an AI developer standpoint, we can treat this as a software bug and approach it at two levels: first, making sure the training process emphasizes abstraction and generalization, and second, using adversarial testing and “red-teaming” to make sure users can’t coax the model into reproducing a specific creator’s work. It won’t be perfect, but an AI developer team committed to this goal can build a product that gets harder and harder to abuse over time.

Biased training data

The other concern as AI intersects with no-code is that it might not tip the scales at all, because the models will only be good at building the kinds of applications they were trained on. That would supercharge some people’s development efforts while leaving behind anyone working on projects the tool’s creators never envisioned. It’s a version of the biased-training-data problem that other areas of AI also grapple with.

At Bubble, we have a lot of experience building tools that get used in ways we never envisioned. We’re constantly surprised by what our users do with Bubble, and that’s an important value we want to make sure the age of GenAI doesn’t diminish.

Again, an AI developer team committed to tackling this problem can go a long way. The same approach that helps prevent copying applies here: By making sure the training process sufficiently abstracts away from the training data, teaching the model general principles of software development rather than specific use cases, we can build a more flexible tool that adapts to domains it hasn’t encountered before.

Of course, it’s also important to feed AI systems a wide variety of training data so they don’t become “narrow-minded,” just as we work closely with a diverse set of users when we develop software products. That way, we avoid over-specializing for one or two use cases and losing the generality that makes no-code programming tools so powerful and transformative.

Bubble’s principles of responsible AI

It was important to us to take our time with GenAI so we could feel confident we were mitigating the risks above as much as possible, and we want the real value to come from how AI combines with Bubble’s full-stack capabilities specifically. Our AI team spent the summer researching, testing, and exploring what’s possible, and now we have a solid roadmap in place. (We’ll be talking more about that at BubbleCon next month, by the way.)

Along the way, we’ve developed some internal rules of engagement for how we work on AI to do our best to realize our vision of empowerment. I share them here in the interest of transparency, to show you how seriously we’re taking this, and maybe also to offer some guideposts to others exploring the idea of training AI tools (including those building them on Bubble itself).

Repeatable work

The first, fundamental principle is that all the work we do needs to be repeatable. Because training AI tools is expensive, and because research can produce complicated models that are iterated on and changed over time, it’s easy for a team to go down an evolutionary path where changing direction means throwing away all the work that came before. In contrast, Bubble’s AI team views its output not as models, but as repeatable processes for generating models. That way, we can recreate our work with an altered set of training data if we need to. We also make sure our training procedures don’t become so costly that re-running them would be prohibitive, which would inadvertently lock us into a single model.
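For teams exploring the same practice, here’s a minimal Python sketch of what treating a model as the output of a repeatable recipe might look like. Everything in it is hypothetical and illustrative, not Bubble’s actual pipeline: `TrainingRecipe` records the inputs (data files, hyperparameters, random seed), `fingerprint()` gives each recipe a stable ID, and `train()` is a toy stand-in for a real training job that draws all of its randomness from the recipe’s seed, so the same recipe always yields the same result.

```python
import hashlib
import json
import random
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class TrainingRecipe:
    """Hypothetical record of everything needed to regenerate a model."""
    data_files: tuple[str, ...]  # identifiers of the training data, in order
    learning_rate: float
    epochs: int
    seed: int

    def fingerprint(self) -> str:
        # Stable hash of the recipe: identical recipes always get the same ID.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def train(recipe: TrainingRecipe) -> dict:
    # Toy stand-in for a real training job (hypothetical). All randomness
    # comes from the recipe's seed, so re-running a recipe reproduces its output.
    rng = random.Random(recipe.seed)
    weights = [rng.random() * recipe.learning_rate for _ in range(recipe.epochs)]
    return {"recipe_id": recipe.fingerprint(), "weights": weights}


if __name__ == "__main__":
    original = TrainingRecipe(("apps_a.jsonl", "apps_b.jsonl"), 0.01, 3, seed=7)
    # Drop one data source and regenerate, instead of patching the old model.
    revised = replace(original, data_files=("apps_a.jsonl",))
    assert train(original) == train(original)  # same recipe, same model
    print(original.fingerprint() != revised.fingerprint())  # True
```

In a real pipeline, `train()` would launch an actual training job; the design point is that the recipe, not the trained artifact, is what gets versioned, so changing the training data means editing the recipe and re-running it rather than starting over.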

This is really important because we — and the entire AI industry — are still learning. A lot of the risks and challenges discussed above can be mitigated with rapid technical iteration, careful testing and red-teaming, and changing up training data as necessary to make sure the resulting models do good rather than harm in the world. But that requires having the flexibility to iterate. It’s critical to make sure that flexibility is built in from day one.

Separating research from development

Our second, related rule of engagement is to draw a line between research and development, and not to promote a model from one to the other without trust-and-safety and red-teaming reviews. We don’t expect our reviews to be 100% perfect. Take OpenAI’s ChatGPT, for example: People have repeatedly found workarounds to its safety precautions even after OpenAI’s internal testing. But this practice provides a baseline we can iteratively improve on, just as ChatGPT has grown safer over successive releases.

A commitment to data security

The final rule of engagement is to take data security and privacy seriously. This should go without saying, but it’s easy to imagine a team getting caught up in the research process and letting its standards slip for how it stores, sanitizes, and otherwise protects the data it works with. Committing to data security from day one is much easier than trying to retrofit safe practices after the fact, and it’s something we’ve invested in even in the early stages of research.

This means going back to IT basics: relying on time-tested third-party technologies for storing and processing data, using controls like encryption appropriately, and doing our work in secure cloud environments.

Along with the whole Bubble team, I am incredibly excited about AI-driven no-code’s potential to empower the next generation of founders, developers, and creators to build a digital world in their image, not in the image of a small handful of big tech developers. This industry moves fast, but we’re also moving responsibly and approaching AI-related projects with a humble, iterative mindset. That way, we can create something that will be incredibly transformative for the software industry and the world.